
Kobus Rust

January 09, 2026

All Roads Lead to Simulation

In most actuarial teams, good ideas do not fail because they are wrong. They fail because they are slow and difficult to test.

Fragmented workflows introduce friction at every step. Data needs to be moved, results reconciled, logic reimplemented. Over time, that friction creates inertia. Teams test fewer ideas, issues surface later, and improvement slows down.

This matters because actuarial work is not linear. It is a continuous cycle of exploration, modelling, testing, deployment, monitoring, and refinement. The only thing that touches every stage of that cycle is analysis.

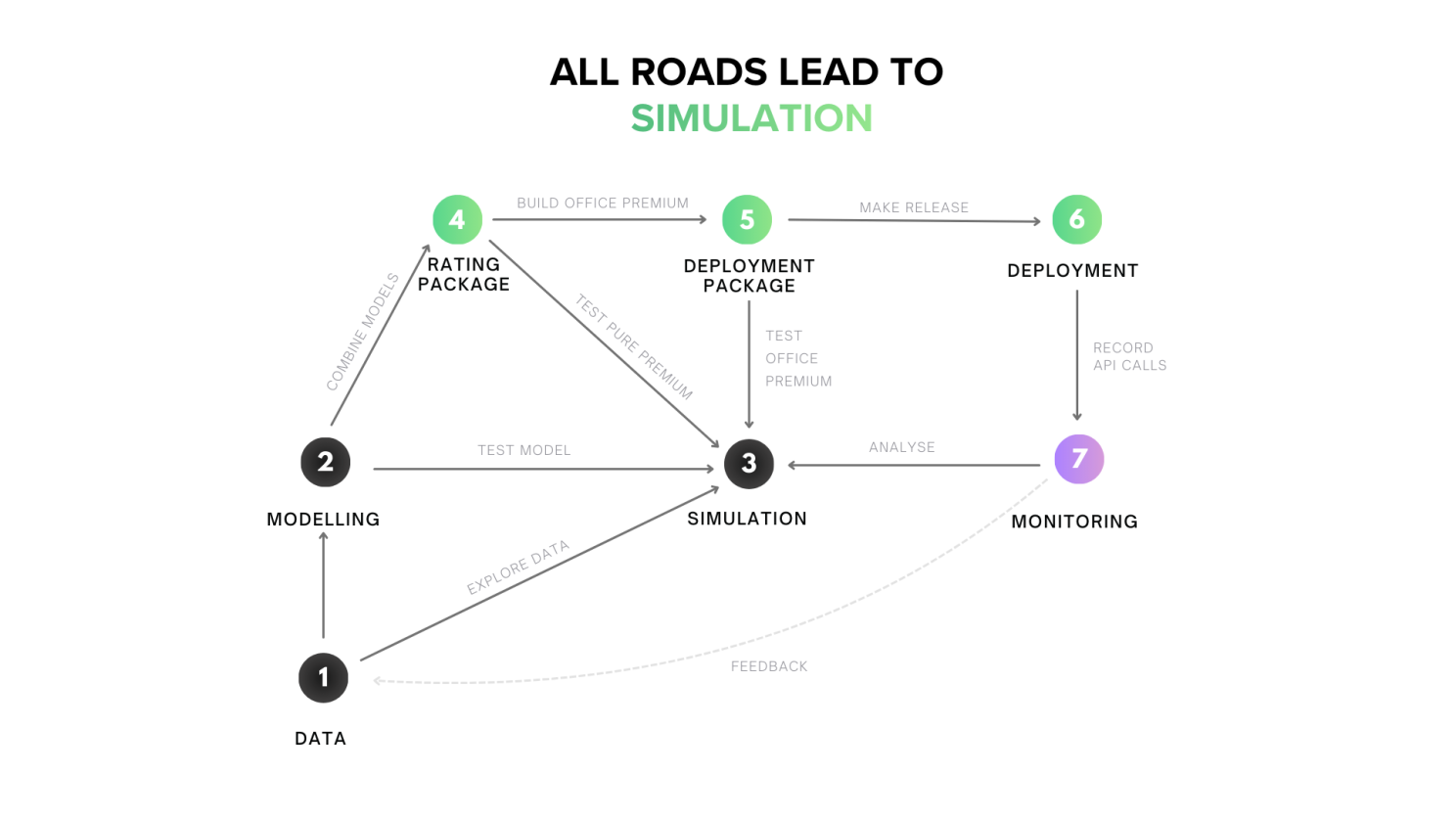

We built our platform around five modules that mirror this workflow: Data for connecting sources, Modelling for building risk and demand models, Simulation for analytics and testing, Deployment for publishing rates, and Monitoring for real-time performance tracking. But Simulation sits at the centre. It's where actuaries spend most of their time: exploring data, validating models, analysing portfolios, and preparing for production.

We call it Simulation because actuarial work is fundamentally about testing scenarios. Not just Monte Carlo simulation. Any time you ask "what if we changed this parameter?" or "how would this portfolio perform under that model?" you're simulating.

Why analysis comes first

When we receive a new dataset, the first instinct is rarely to model. We open it in Excel (or Python if it doesn't fit) to understand what's going on. We pull pivots, examine distributions, check trends, and investigate data quality. This step matters more than any modelling technique, because poor data undermines even the most sophisticated models.

Everything starts with data. Everything ends with data too, because our models are abstractions of the real world and we need to understand what they are actually saying. Testing scenarios against data is how we connect those two ends.

That insight shaped how we designed our platform.

The problem with traditional workflows

In most organisations, each stage of the actuarial workflow lives in a different tool.

Data exploration happens in Excel. Modelling happens in Python or R. Rating packages live in legacy systems. Deployment runs through production infrastructure. Monitoring sits somewhere else again.

A simple rerate becomes a multi-step journey. Extract the book from the policy admin system. Import it into the rating tool. Export results. Analyse them in Excel. Spot an issue. Go back to the beginning.

Each transition introduces friction. Data needs reformatting. Numbers need reconciling. Assumptions drift across files. You wait for batch processes to finish. Over time, this friction creates inertia.

The result is not worse models. It is fewer ideas tested, issues discovered later, and slower improvement.

We built our platform to eliminate that friction.

Simulation as the centre of the workflow

The architecture mirrors the actuarial workflow: Data connects to policy admin systems and data warehouses, Modelling provides environments for fitting transparent machine learning models, Simulation handles all analytical testing, Deployment manages production rating APIs, and Monitoring tracks real-time performance. But Simulation sits at the centre. It's where you test ideas before they reach production.

Simulation is where data exploration, model behaviour, rating logic, and portfolio outcomes meet.

Crucially, Simulation does not depend on having advanced or fully specified models. You can extract real value from it long before model development begins.

You do not need to fit your models in Modelling to use Simulation. You do not need to deploy through Deployment either. If you already have models built elsewhere, you can import them into Simulation and immediately start testing: simulate their outputs, compare structures, stress test assumptions, and analyse portfolio impact without changing how you work today.

Using the platform end to end is simply more seamless. Everything shares the same execution logic and data context, which reduces duplication and prevents drift. But Simulation itself stands on its own.

This allows teams to adopt the workflow incrementally rather than all at once.

Exploration before modelling

Because Simulation is available from the outset, insight does not depend on committing to a modelling approach.

You can simulate directly from raw data connected through Data to understand exposure distributions, loss patterns, or data quality. The constraint in most environments isn't "can I calculate a Gini coefficient?" It's "can I calculate it on 47 different segments of the data without writing a loop and waiting?" In Simulation, you can. Pull deciles, examine distributions, pivot by any dimension, and generate diagnostics like lift charts in minutes, even across portfolios with millions of policies.
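To make the "Gini on every segment" idea concrete, here is a minimal sketch in plain Python. It is not Simulation's API. The field names `predicted`, `actual`, and `region` are hypothetical, and the Gini here is defined from the Lorenz curve of actual losses ordered by predicted risk, with equal weight per policy, which is one of several conventions in use.

```python
from collections import defaultdict


def gini(predicted, actual):
    """Gini from the Lorenz curve of actual losses, ordered by predicted risk.

    Assumes equal weight per policy. Returns 0.0 for an empty segment or
    a segment with no losses, where the curve is undefined.
    """
    n = len(actual)
    total = sum(actual)
    if n == 0 or total == 0:
        return 0.0
    # Rank policies from lowest to highest predicted risk.
    order = sorted(range(n), key=lambda i: predicted[i])
    cumulative = 0.0
    area = 0.0
    for i in order:
        previous = cumulative
        cumulative += actual[i] / total
        # Trapezoid under the Lorenz curve for this policy's slice.
        area += (previous + cumulative) / 2 / n
    return 1.0 - 2.0 * area


def gini_by_segment(rows, segment_key):
    """Group rows by a segment field and compute one Gini per group."""
    groups = defaultdict(lambda: ([], []))
    for row in rows:
        preds, acts = groups[row[segment_key]]
        preds.append(row["predicted"])  # hypothetical field name
        acts.append(row["actual"])      # hypothetical field name
    return {seg: gini(p, a) for seg, (p, a) in groups.items()}
```

Calling `gini_by_segment(rows, "region")` returns a dictionary with one coefficient per region. The same call works for any other dimension in the rows, which is the point: the segmentation is a parameter, not a rewrite.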

Ad-hoc exploration becomes genuinely fast, and the focus stays on understanding the data rather than preparing it for another tool.

Fast, iterative model development

Once modelling begins in Modelling, Simulation becomes the testing ground. Fit a model. Simulate the book through it. Examine the results. Something looks off? Adjust relativities, refine segmentation, update risk models in Modelling, and simulate again.

Consider a team testing a new peril-based rating structure. In a traditional workflow, they might spend hours: export the book, run it through the rating model, import results to Excel, spot that coastal properties are underpriced, adjust the model, and start over. In Simulation, that same iteration takes minutes. Adjust the coastal loading, re-simulate the portfolio, examine the loss ratio distribution, and test another adjustment, all in the same session.
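The coastal example can be sketched in a few lines of Python. This is an illustration of the iteration loop, not the platform's implementation. The policy fields (`coastal`, `base_premium`, `claims`) and the toy book are made up for the example, and the sketch assumes claims and volumes do not respond to the price change.

```python
def loss_ratios(policies, coastal_loading=1.0):
    """Loss ratio for coastal, inland, and the whole book after applying
    a multiplicative loading to coastal premiums.

    Field names are hypothetical. Claims are held fixed, so this ignores
    any demand or retention response to the new price.
    """
    premium = {"coastal": 0.0, "inland": 0.0}
    claims = {"coastal": 0.0, "inland": 0.0}
    for p in policies:
        segment = "coastal" if p["coastal"] else "inland"
        factor = coastal_loading if p["coastal"] else 1.0
        premium[segment] += p["base_premium"] * factor
        claims[segment] += p["claims"]
    result = {
        seg: claims[seg] / premium[seg]
        for seg in premium
        if premium[seg] > 0
    }
    result["total"] = sum(claims.values()) / sum(premium.values())
    return result


# Toy book, purely illustrative.
book = [
    {"coastal": True, "base_premium": 1000.0, "claims": 900.0},
    {"coastal": True, "base_premium": 1200.0, "claims": 1100.0},
    {"coastal": False, "base_premium": 800.0, "claims": 400.0},
    {"coastal": False, "base_premium": 1000.0, "claims": 600.0},
]

# Each pass through this loop is one "adjust and re-simulate" iteration.
for loading in (1.0, 1.1, 1.25):
    print(loading, loss_ratios(book, coastal_loading=loading))
```

The loading is an argument rather than an edit to the model, so trying another value costs one function call instead of another export and import cycle.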

Speed matters here. When rerating takes hours, iteration becomes cautious. When it takes minutes, teams stay in flow. More ideas get tested and problems surface earlier.

Whether models are fitted inside Modelling or imported from elsewhere, Simulation provides a consistent lens for evaluation.

Comparing rating structures and strategies

As models mature, they are combined into rating packages. This is where Simulation becomes essential for testing.

You might want to understand how different perils contribute to portfolio risk. You might want to compare a frequency-severity approach to a pure premium model under identical assumptions. You might want to test alternative peril splits, loadings, or pricing changes for specific channels or risk groups.

Simulation lets you stress test these alternatives side by side. Pull Gini coefficients, examine decile performance, and review loss ratio distributions without switching tools or duplicating logic.
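As a rough illustration of decile performance, the sketch below ranks policies by predicted value, splits them into equal-count buckets, and reports mean predicted against mean actual in each. The function name and inputs are hypothetical, and equal-count bucketing is an assumption; exposure-weighted buckets are a common alternative.

```python
def decile_table(predicted, actual, buckets=10):
    """Mean predicted vs mean actual per bucket, ranked by predicted value.

    Returns a list of (bucket_number, mean_predicted, mean_actual) tuples.
    Buckets hold equal policy counts (an assumption; no exposure weights).
    """
    order = sorted(range(len(predicted)), key=lambda i: predicted[i])
    n = len(order)
    table = []
    for b in range(buckets):
        # Integer slicing keeps bucket sizes within one policy of each other.
        idx = order[b * n // buckets:(b + 1) * n // buckets]
        if not idx:
            continue  # fewer policies than buckets
        mean_pred = sum(predicted[i] for i in idx) / len(idx)
        mean_act = sum(actual[i] for i in idx) / len(idx)
        table.append((b + 1, mean_pred, mean_act))
    return table
```

To compare two structures side by side, run `decile_table` once per model against the same `actual` values. A well-calibrated model shows mean actual tracking mean predicted across buckets, and the buckets where two models disagree are the ones worth investigating.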

The value is not just in the metrics, but in being able to see where different approaches agree and where they diverge. Those divergences are often the most informative part of the analysis.

Renewals and portfolio optimisation

The same Simulation engine supports core business processes.

For renewals, you load the book, apply updated assumptions, and immediately see the impact: which policies face increases, where retention risk sits, and how the combined ratio shifts.

For portfolio optimisation, you can identify underperforming segments and test targeted pricing changes. Simulate different scenarios by region, peril mix, or policy characteristics. Because iteration is fast and friction is low, you can explore dozens of strategies in a single session.

But testing alone isn't enough. Ideas need to reach production.

Deployment with parity

Many workflows break down at deployment. Models built across multiple tools need to be translated into production systems. That translation introduces errors. What worked in analysis behaves differently in production.

For teams that choose to deploy through Deployment, the same execution logic used in Simulation runs in production. There is no reimplementation and no translation layer.

What you tested in Simulation is exactly what gets deployed.

Teams that continue deploying through existing systems can still use Simulation as their analytical backbone, without changing their production architecture.

Closing the feedback loop

Once pricing is live, Monitoring captures real-time performance through API logs and production data feeds. Actual calls and experience are tracked as they happen.

When patterns emerge (higher claims than expected or lower conversion in a segment), you can load that data directly into Simulation without export steps. Pull the same diagnostics you used during development and test potential fixes immediately.

As experience accumulates, models can be recalibrated in Modelling and the impact simulated across the entire book. Improvement becomes continuous rather than annual.

Why this matters

Fragmented workflows slow teams down and hide insight. The cost is not just operational. It is missed opportunity.

By centring the actuarial workflow around Simulation and removing friction between stages, teams regain momentum. Ideas get tested earlier. Good models reach production faster. Feedback loops tighten.

Simulation isn't built for everything. Hyperparameter tuning and complex optimisation routines still belong in specialised environments. But for the core of actuarial analytical work (exploring data, testing models, comparing structures, analysing portfolios), Simulation provides the speed and flexibility to stay in flow.

Actuarial work is a cycle. Simulation is what keeps that cycle moving.

That is the workflow we built for.